Alexa and Sitecore Integration - Part 2

Recap

As you may recall from the previous post - we enabled the OData Item Service in Sitecore and ran some test queries.

Now it's time to create the "brains" behind the Alexa skill that will use the OData Item Service to let the user ask Alexa about dogs. In other words - we will give our content a voice :). Note: we will not delve too deeply into the hows and whys of Alexa development, as there are many ways of doing it in different languages and environments.

Alexa 101

Hopefully by now you, the reader, know what Amazon's Alexa is. If not - welcome to Planet Earth!

1. Alexa is a voice-activated device that you can talk to in order to carry out a (hopefully) useful set of actions, called a Skill. You activate a specific skill by saying a magic word called an invocation. You can think of a skill as an app that is invoked via an HTTP endpoint.

2. Once a skill is invoked - you can say specific phrases or utterances to carry out intents. Intents are mapped to functions that carry out some work and return a result which is then output vocally by Alexa. Utterances may contain dynamic spoken values that are passed to the function that handles the work.

3. Dynamic values within an utterance are called Slots. Slots can be associated with specific types, e.g. numbers, names, dates or a list of values. Slot values are passed to the associated intent (function) and can be used to dynamically query our app.

4. Alexa comes with a companion app, installed on a tablet or mobile device, that enables you to associate new skills with your Alexa device. In some cases you may be asked to authenticate using the companion app.
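To make these terms concrete, here is a rough sketch of the JSON request Alexa posts to a skill's endpoint when an utterance matches an intent, and how a slot value can be read from it. The `DogByName` intent and `Name` slot match the interaction model used later in this post; the sample values and the `getSlotValue` helper are made up for illustration.

```javascript
// A trimmed-down IntentRequest, as Alexa would post it to the skill endpoint.
// The application ID and slot value are placeholders, not real data.
const sampleEvent = {
  version: '1.0',
  session: {
    application: { applicationId: 'amzn1.ask.skill.example-id' }
  },
  request: {
    type: 'IntentRequest',
    intent: {
      name: 'DogByName',
      slots: {
        Name: { name: 'Name', value: 'Rex' }
      }
    }
  }
};

// Hypothetical helper: returns the spoken slot value, or null when it is missing
function getSlotValue(event, slotName) {
  const intent = event.request && event.request.intent;
  const slot = intent && intent.slots && intent.slots[slotName];
  return slot && slot.value !== undefined ? slot.value : null;
}

console.log(sampleEvent.request.intent.name);   // DogByName
console.log(getSlotValue(sampleEvent, 'Name')); // Rex
```

Returning `null` for a missing slot keeps the calling code simple, since Alexa can invoke an intent without filling every slot.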

Creating the Alexa Skill.

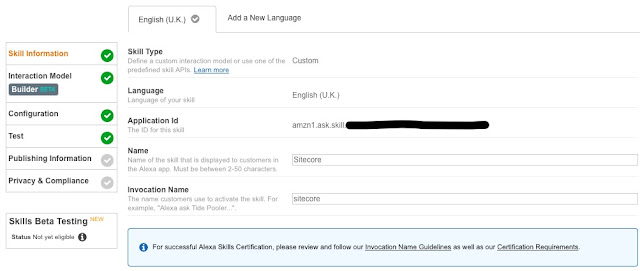

To define a skill you must first head over to https://developer.amazon.com/alexa and then sign in using your Amazon credentials. Once you're signed in - go to your Alexa Skills dashboard and click the link to create a new skill. For this demo I have used the Custom Skill option. In order to get your skill up and running you need to complete the Skill Information, Interaction Model, Configuration and Testing sections. I will provide some brief detail on these below.

Skill Information.

So obviously - when you create a Skill you need to let Amazon know a couple of things about your app. Firstly, the Name field is effectively the display name that you see for your skill in the Alexa companion app. Secondly, the Invocation field is where you enter the magic word(s) that will trigger your skill. When Alexa hears the invocation - it will listen out for utterances for that skill.

Note the Application Id which is a unique identifier for your skill that Amazon will generate. You will need the Application Id when creating the logic for the skill.
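As a sketch of where the Application Id ends up: each request Alexa sends carries the skill's ID at `session.application.applicationId`, and the endpoint can compare it against the expected value to reject requests meant for another skill. The `isRequestForSkill` helper and the ID below are hypothetical; as far as I know, the SDK used later in this post performs a similar comparison once its `APP_ID` is set.

```javascript
// Hypothetical helper: true only when the request targets the expected skill
function isRequestForSkill(event, expectedSkillId) {
  const app = event && event.session && event.session.application;
  return Boolean(app) && app.applicationId === expectedSkillId;
}

// Placeholder ID; the real one is shown on the Skill Information screen
const expectedId = 'amzn1.ask.skill.example-id';
const incoming = { session: { application: { applicationId: expectedId } } };

console.log(isRequestForSkill(incoming, expectedId));        // true
console.log(isRequestForSkill({ session: {} }, expectedId)); // false
```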

Interaction Model.

The Interaction Model screen is where you define the intents, utterances and slots that allow you to map phrases to specific functions. At its most basic - the interaction model is a JSON file that defines utterances, slots and slot types. However, Amazon has worked out that people make mistakes when hand crafting JSON so they've provided us with an Interaction Builder tool that provides a nice interface where we set up utterances and slots in a visual manner. Behind the scenes, the Interaction Builder actually creates the JSON for us!

As you can see from the screenshot below - I have created a number of intents: DogByName, DogByPersonality, DogByType and ListDogs. Each intent makes our skill respond differently and can be triggered by one or more utterances, i.e. more than one way of saying something. You can also see how the slots within an utterance are highlighted in blue and enclosed in braces (e.g. {Name}).
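For readers who prefer to see the JSON, the sketch below shows roughly what an interaction model for two of these intents could look like, written as a JavaScript object. The intent names, the `Name` slot and the `sitecore` invocation come from this post; the sample utterances and the slot type are guesses, and the exact wrapper around the model has changed between versions of the developer console. The `findUndeclaredSlots` helper is hypothetical and simply checks that every `{Slot}` used in an utterance is declared on its intent.

```javascript
// Illustrative interaction model; sample utterances and slot types are assumptions
const model = {
  interactionModel: {
    languageModel: {
      invocationName: 'sitecore',
      intents: [
        {
          name: 'ListDogs',
          slots: [],
          samples: ['list the dogs', 'what dogs do you have']
        },
        {
          name: 'DogByName',
          slots: [{ name: 'Name', type: 'AMAZON.US_FIRST_NAME' }],
          samples: ['tell me about {Name}', 'who is {Name}']
        }
      ],
      types: []
    }
  }
};

// Hypothetical check: lists slots used in an utterance but not declared on the intent
function findUndeclaredSlots(intent) {
  const declared = new Set(intent.slots.map((slot) => slot.name));
  const missing = [];
  for (const sample of intent.samples) {
    for (const match of sample.matchAll(/\{(\w+)\}/g)) {
      if (!declared.has(match[1])) missing.push(match[1]);
    }
  }
  return missing;
}

// Print each intent name with any slots it forgot to declare
for (const intent of model.interactionModel.languageModel.intents) {
  console.log(intent.name, findUndeclaredSlots(intent));
}
```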

As you are working on your interaction model - it's a good idea to save it regularly so you don't lose your work. After you are happy with your model, click Build Model to validate and deploy your model so that it becomes usable / testable. If you then make changes to your model - you will need to click Build Model again in order for the changes to be reflected.

Configuration.

In the configuration section there are two important things we must set: the Service Endpoint Type and the Default URL / ARN. The Service Endpoint Type can be either an HTTPS URL or an AWS Lambda function, denoted by its ARN (see below). For this example, I have created a Node.js script (DoggieData) that runs as a Lambda function, i.e. in a serverless container that is not tied to a server. Amazon does not force you to use Lambda, and you can quite happily specify a URL for a service that you host.

In short - the endpoint URL is the brains behind your skill and where functions carry out the work of intents. Note: the endpoint is not the Sitecore OData Item Service.

The configuration section also has other optional settings for geolocated endpoints, and also whether you want to use account linking with your skill (more on this in the next post).

Testing.

Once you've configured an endpoint for your skill, the Testing section allows you to run an end to end test of your skill by entering your utterances and slots as text values. After you run the test, you can see the SSML that is generated. The generated SSML is what Alexa says back to you. If your endpoint is not ready and functional - testing will obviously not work.
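For orientation, the response an endpoint returns looks roughly like the object below, with the spoken text travelling as SSML inside `outputSpeech`. This is a hand-rolled sketch of that envelope using a hypothetical `buildTellResponse` helper; the SDK used in the next section builds an equivalent response when a handler emits `:tell`.

```javascript
// Hypothetical helper: wraps plain text in the response envelope Alexa expects
function buildTellResponse(text) {
  // Escape characters that would otherwise break the SSML markup
  const safe = text
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
  return {
    version: '1.0',
    response: {
      outputSpeech: { type: 'SSML', ssml: '<speak>' + safe + '</speak>' },
      shouldEndSession: true // a "tell" ends the conversation after speaking
    }
  };
}

console.log(JSON.stringify(buildTellResponse('Rex & Fido'), null, 2));
```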

If your skill is in Developer mode - you can also see and add it in the Your Skills section of the Alexa mobile app. Once you add your skill via the companion app - you can interact with it via the Alexa device by saying the invocation, e.g. "Alexa - launch Sitecore".

Show me the code..

So now we've got the gist of setting up a skill, let's look at the code. The example here is a single JavaScript file that runs within a Node.js environment. It is based on the Alexa Skills Kit SDK for Node.js (the alexa-sdk module). Note that any dependencies, such as Node.js modules, must be uploaded along with your code to AWS Lambda (or your choice of hosting environment).

    'use strict';

    const odata = require('odata-client');
    const util = require('util');
    var Alexa = require('alexa-sdk');

    //set up config values from environment
    var api_host = process.env.API_HOST;
    var api_key = process.env.API_KEY;
    var profile_host = process.env.PROFILE_HOST;
    var alexa_skill_id = process.env.ALEXA_SKILL_ID;

    //bootstrap Alexa
    exports.handler = (event, context, callback) => {
        var alexa = Alexa.handler(event, context);
        //TODO: add profile code here later
        alexa.APP_ID = alexa_skill_id;
        alexa.registerHandlers(handlers);
        alexa.execute();
    };

    //define handler functions for intents
    var handlers = {
        'LaunchRequest': function () {
            //code here is called when we say the invocation only.
        },
        'Unhandled': function () {
            //this code is called when no other handler matches the request
            this.emit(':tell', 'huh?');
        },
        'ListDogs': function () {
            var handler = this;
            //create odata client object and pass in config values
            var q = odata({
                service: "https://" + api_host + "/sitecore/api/ssc/aggregate/content",
                resources: "Items",
                custom: {
                    sc_apikey: api_key,
                    format: "json",
                    expand: "Fields(select=Celebrity,Description,Personality)"
                }
            });
            q.filter("TemplateName", "Dog"); //filter by template name
            q.expand("Fields"); //get the fields defined in the expand property above
            q.get() //make the request
                .then(function (response) {
                    //handle response when complete
                    var body = JSON.parse(response.body);
                    if (body != null) {
                        if (body.value && body.value.length > 0) {
                            var result = "";
                            //build a string of dogs, separated by commas so Alexa pauses
                            //between names (HTML tags such as <br> are not valid SSML).
                            for (var i = 0; i < body.value.length; i++) {
                                result += body.value[i].Name + ", ";
                            }
                            //Alexa will speak the following result.
                            handler.emit(":tell", "Here's a list of Sitecore pooches: " + result);
                        }
                    }
                });
        },
        ....
    }

The beginning of the script specifies module dependencies and sets up config variables that are pulled from the environment. One of these is the Alexa Skill ID, which is passed to the Alexa handler (provided by the SDK). This is how you associate your code with the Skill definition you set up in the Alexa Skills dashboard earlier.

As you can see from the ListDogs function above, an OData client object is instantiated with parameters such as the API host, the path to the OData service and the API key. I've also defined extra fields such as Celebrity, Description and Personality that I want to retrieve as part of the OData request. The request is then initiated by calling the .get() method, which returns a promise. Finally, the .then() callback processes the response we get back from Sitecore. The response data is parsed to build a string that is then emitted (generating SSML) to Alexa, which speaks the response back to the user.
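One thing to be aware of is that the ListDogs handler stays silent when the query returns no items. A minimal sketch of one way to handle that is to move the string building into a small function that always returns something to say. The `dogsToSpeech` name, the fallback sentence and the sample payload are my own inventions here; only the `value` array and the `Name` property come from the code above.

```javascript
// Hypothetical helper: turns the parsed OData response body into a spoken sentence
function dogsToSpeech(body) {
  const items = body && Array.isArray(body.value) ? body.value : [];
  if (items.length === 0) {
    // Fallback wording is an assumption, not part of the original skill
    return "Sorry, I couldn't find any dogs.";
  }
  const names = items.map((item) => item.Name);
  return "Here's a list of Sitecore pooches: " + names.join(', ');
}

// Sample payload shaped like the `value` array used above; the names are made up
const sampleBody = { value: [{ Name: 'Rex' }, { Name: 'Fido' }] };

console.log(dogsToSpeech(sampleBody));    // Here's a list of Sitecore pooches: Rex, Fido
console.log(dogsToSpeech({ value: [] })); // Sorry, I couldn't find any dogs.
```

Inside the handler, this would be called as `handler.emit(':tell', dogsToSpeech(body))`, which also makes the wording easy to test without talking to Sitecore or Alexa.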

So there you have it - a basic example of using an Alexa skill to "talk" to the OData Item Service in Sitecore 9. In the next post - we will dive deeper and see how we can bring xConnect into the mix to record the interactions we have with Alexa.

I hope you found this post useful. Stay tuned for the next one!
